From digital voice assistants to smart home devices, artificial intelligence (AI) is becoming common in our everyday lives. Just as these AI tools help make us more efficient, AI-based image analysis tools promise to make our work more efficient. In metallography and materialography, AI opens a new world of easier and more accurate image analysis.

Solving the Challenges of Image Thresholding in Microstructure Analysis

Consider the challenges of conventional threshold-based analyses used in the microstructure analysis of metals, alloys, ceramics, composites, and other materials. While it is an established method for microstructure analysis, image thresholding has some limitations.

For example, thresholding doesn’t detect specific structures in the images. Instead, it detects every object within the selected intensity range at once, without distinguishing between them. To find specific structures, thresholding can be combined with other approaches, such as edge enhancement filters, shading correction, and morphological analyses. The problem is that these approaches require both programming skills and effort to enable automated analyses. Also, some problems may not be fixable with these approaches, considering the potentially huge number of special cases and exceptions.
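To make this limitation concrete, here is a minimal sketch in plain Python. The tiny image and the threshold level of 100 are invented for illustration and are not taken from any real sample or software. A dark vertical grain boundary crosses a dark horizontal polishing scratch, and a single intensity threshold flags both.

```python
# Toy 5x7 grayscale image (0 = black, 255 = white); all values are invented.
# Column 3 is a dark grain boundary; row 1 is a dark polishing scratch.
image = [
    [200, 200, 200, 40, 200, 200, 200],
    [ 50,  50,  50, 40,  50,  50,  50],
    [200, 200, 200, 40, 200, 200, 200],
    [200, 200, 200, 40, 200, 200, 200],
    [200, 200, 200, 40, 200, 200, 200],
]


def threshold(img, level):
    """Return a binary mask: 1 where the pixel is darker than `level`."""
    return [[1 if px < level else 0 for px in row] for row in img]


mask = threshold(image, level=100)

# Pixels flagged by the threshold versus pixels that truly belong to the boundary.
detected = sum(sum(row) for row in mask)
boundary_pixels = len(image)  # one boundary pixel per row (column 3)

print(f"flagged: {detected}, true boundary pixels: {boundary_pixels}")
```

The threshold flags 11 pixels even though only 5 belong to the grain boundary, because the scratch falls in the same intensity range. No choice of level separates the two in this toy image.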

In contrast, machine learning forms rules for object detection from multiple representative examples of the objects of interest. Automated evaluation is based on deep artificial neural networks that have learned to classify image areas independently of preset threshold values, which makes image analysis easier and more accurate.

An Example of AI-Assisted Image Analysis in Metallography and Materialography

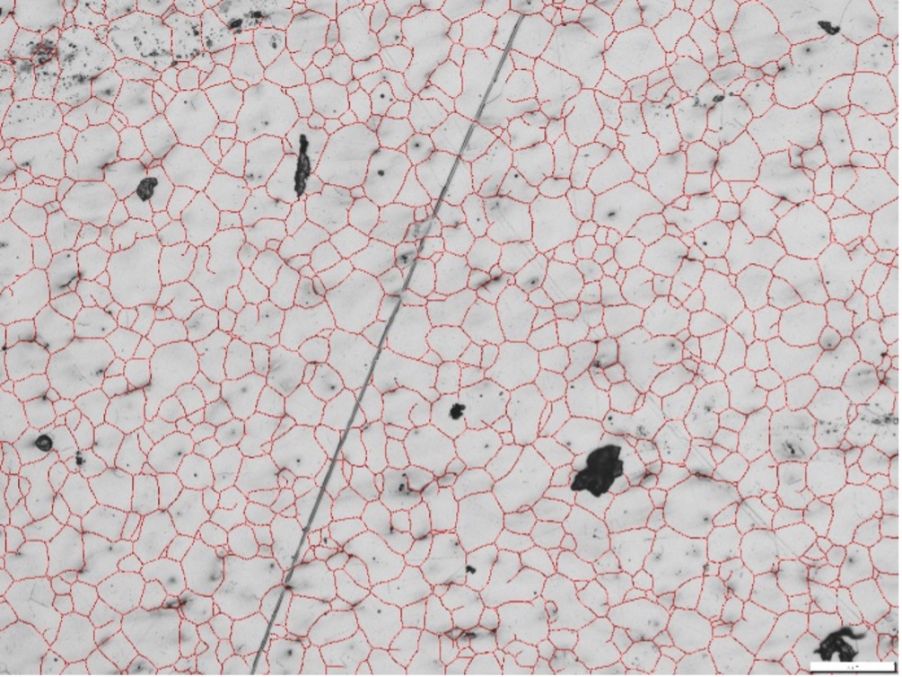

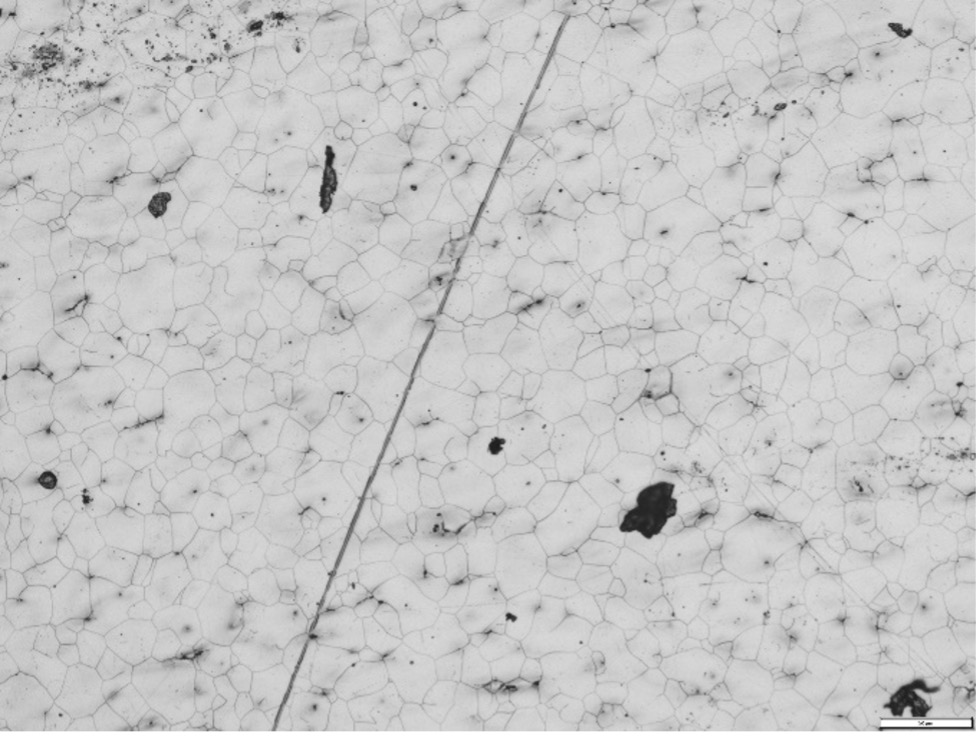

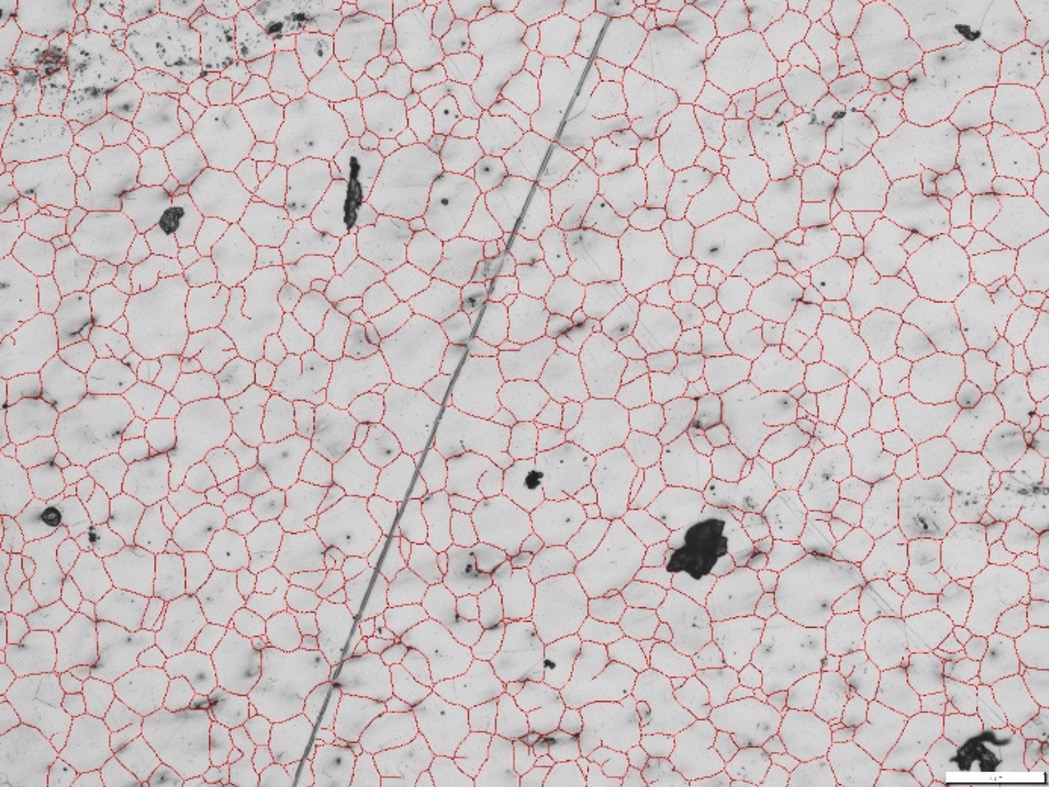

To see how AI improves image analysis, let’s review an example. The image below shows a metallographic sample with grain boundaries, polishing marks, and dust (Figure 1).

Figure 1. Metallographic section with grain boundaries, polishing marks, and dust.

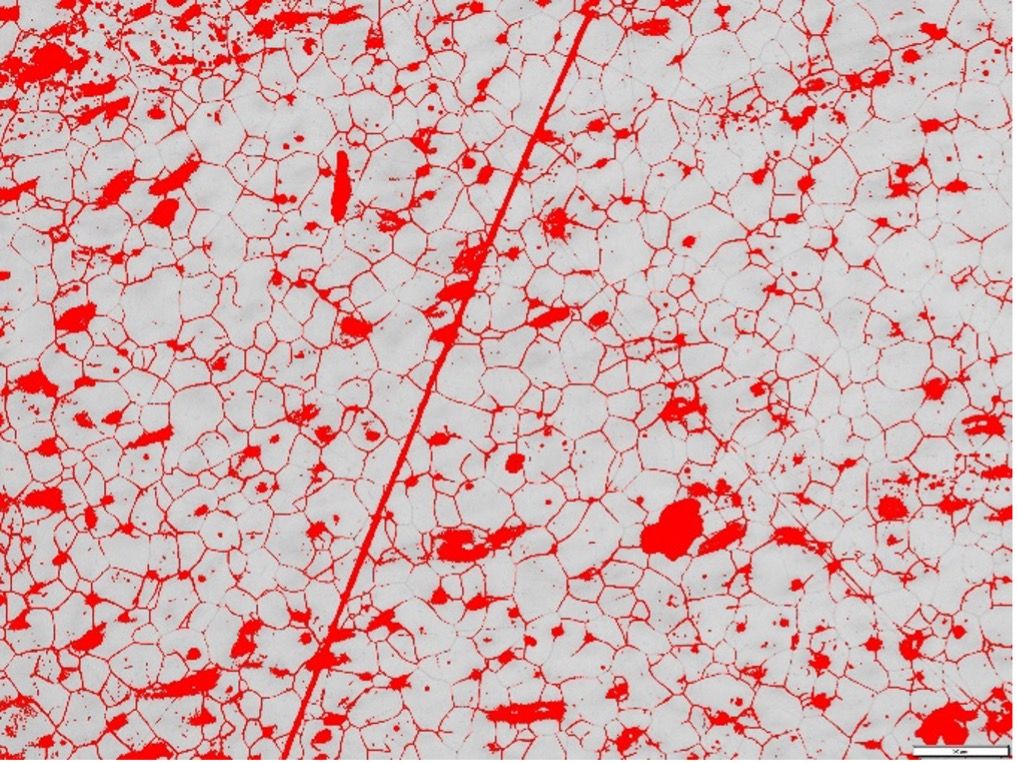

With the simple threshold setting, the image analysis software can’t clearly distinguish the grain boundaries from the polishing marks and dust (Figure 2). This results in an incorrect grain size measurement since it’s impossible to detect only the grain boundaries.

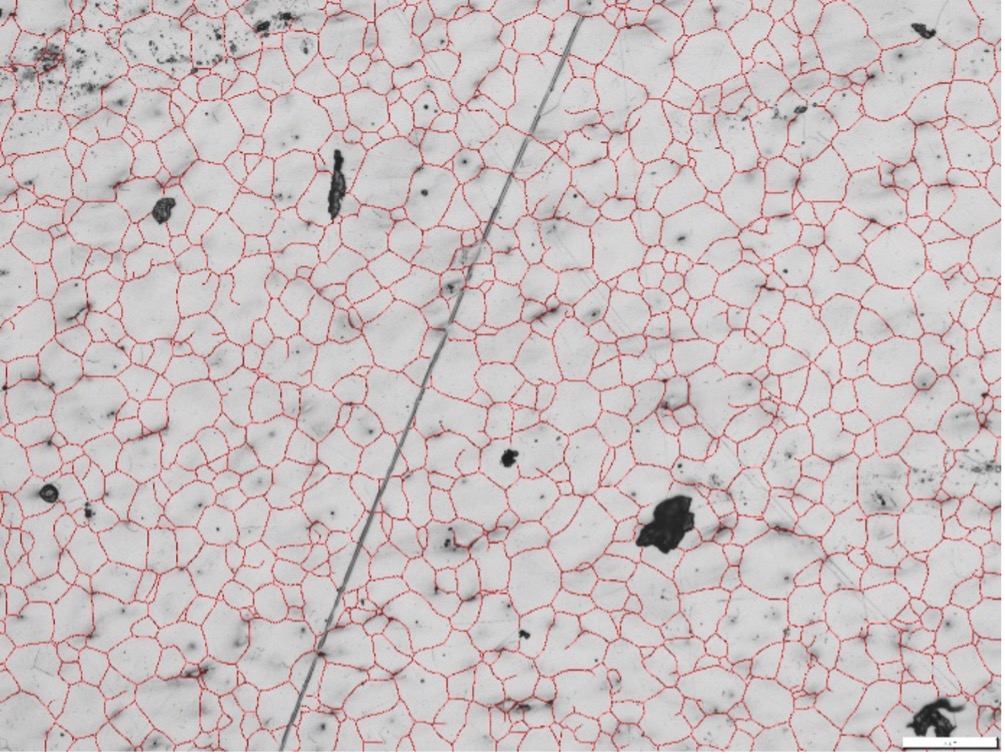

Now let’s take a look at the same image analyzed with AI (Figure 3). Here, traces of grinding, polishing, dust, and residues are distinguished from objects of interest, such as grain boundaries. AI-based image analysis can reliably and reproducibly detect grain boundaries, even in microstructures with very inhomogeneous grain structures. Further, structural components can be classified with pixel accuracy.

Figure 3. The same metallographic section analyzed with TruAI deep learning technology. The AI analysis clearly shows the detected grain boundaries (red), distinguishing them from the polishing marks and dust.

AI-Assisted Microstructure Analysis Using Industrial Imaging Software

The latest industrial image analysis software includes artificial intelligence to assist with analyzing complex images. Our very own PRECiV™ image and measurement software now offers TruAI™ deep-learning technology. You can now apply a trained neural network to images for higher reproducibility and more robust analysis.

One handy feature of TruAI technology is instance segmentation, which splits touching objects into separate ones. This powerful segmentation method is helpful for complex images with objects that touch, such as grains, powder-based materials used in sintering and additive manufacturing, particles, and defects. Users can train a network using instance segmentation and apply it with one click to separate objects.

TruAI technology also lets you train neural networks with semantic segmentation, which classifies each pixel as belonging to the background or the foreground. Unlike instance segmentation, it cannot distinguish between merged objects. As a result, semantic segmentation requires some manual post-processing to separate individual objects. This makes semantic segmentation better suited to easier analysis tasks, such as those with well-separated objects or where object separation is irrelevant. Semantic segmentation is helpful for distinguishing between large areas, such as in phase analysis, detection of welding points, heat-affected zone analysis, and paint layer analysis.
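The difference between the two segmentation types can be sketched with a toy example. The code below is an illustration in plain Python, not how TruAI works internally. The masks and the helper `count_objects` are hypothetical. It counts connected foreground regions in a semantic (foreground/background) mask and compares that with an instance label map in which every object carries its own ID.

```python
def count_objects(mask):
    """Count 4-connected foreground regions in a binary mask (flood fill)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            count += 1  # found a new, unvisited region
            seen[r][c] = True
            stack = [(r, c)]
            while stack:
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
    return count


# Semantic masks: 1 = foreground (particle), 0 = background.
separated = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0],
]
touching = [
    [1, 1, 1, 1, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

# Instance label map for the touching case: each particle has its own ID.
instance_labels = [
    [1, 1, 2, 2, 0],
    [1, 1, 2, 2, 0],
    [0, 0, 0, 0, 0],
]
instance_count = len({v for row in instance_labels for v in row if v != 0})

print(count_objects(separated), count_objects(touching), instance_count)
```

In the semantic mask, the two touching particles merge into a single region, while the instance label map still reports two objects. This is why touching grains or particles call for instance segmentation or extra post-processing.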

For flexibility, both semantic and instance segmentation methods are available in the Count and Measure solution and select Materials Solutions in PRECiV software.

How to Properly Train a Neural Network for Metallography and Materialography

As illustrated above, applying a trained neural network to images is an efficient way to analyze microstructures. However, the network must be trained properly for accurate and reproducible image analysis. Training an optimal neural network requires carefully labeled examples, as the resulting algorithm is based on the labeled data. In other words, the better the training, the better the image processing. For this reason, it’s critical that a specialist in the materials analysis application is involved in the training process.

Here’s the typical workflow to properly train a deep neural network for industrial image analysis:

1. Label images and verify data for the training.

For deep-learning image analysis, the labeling of data requires creating images with ground truth. In simple terms, ground truth is the information with which the neural network is trained and evaluated. Mark the ground truth in the image through image processing or hand labeling so the network can learn from these labels.

A specialist must verify the training data. Only a specialist can define which data should be used for the training as it is the source the trained neural network uses for the analysis. The specialist must be an expert in the material analysis application so they can make decisions about which details in the image the network should detect.

Consider the example of a metallographic section (Figure 1, left). The specialist might ask: When is the feature considered a grain boundary? How do we evaluate abnormalities? The data must be representative of all expected objects and mappings within each class.

2. Train the neural network.

The next step is to select the optimal training configuration for the task. This involves specifying how the training data is augmented and selecting the training model.

Augmenting the training data supports the training by giving the neural network model significantly more opportunities to learn and increase its reliability. The training data is multiplied by rotation, mirroring, and other image operations.

It is important to note which augmentation method makes sense for the specific application. For instance, rotation is useful for structures with no preferred direction but not useful for elongated materials such as rolled materials.
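As a rough sketch of the augmentation idea, the plain-Python example below multiplies one labeled image by mirroring and 90-degree rotations, applying each operation to the image and its label mask together. The function names and the `allow_rotation` switch are hypothetical and do not correspond to settings in any particular software.

```python
def rotate90(img):
    """Rotate a 2D list 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def mirror(img):
    """Flip a 2D list horizontally."""
    return [row[::-1] for row in img]


def augment(image, label, allow_rotation=True):
    """Return (image, label) pairs; every operation is applied to both."""
    pairs = [(image, label), (mirror(image), mirror(label))]
    if allow_rotation:
        img, lab = image, label
        for _ in range(3):  # 90, 180, and 270 degrees
            img, lab = rotate90(img), rotate90(lab)
            pairs.append((img, lab))
            pairs.append((mirror(img), mirror(lab)))
    return pairs


# Invented 2x2 image and its ground-truth label mask.
image = [[10, 20], [30, 40]]
label = [[0, 1], [0, 0]]

isotropic = augment(image, label)                      # no preferred direction
rolled = augment(image, label, allow_rotation=False)   # elongated structure

print(len(isotropic), len(rolled))
```

With rotation enabled, one labeled image yields eight training pairs. With rotation disabled, as might be chosen for a rolled material, only the original and its mirror image remain, so the elongation direction is preserved.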

3. Validate the training success.

In deep learning, an artificial neural network with a given structure is created, but the decision-making process that the network uses is hidden. The network does not provide any analytical justification as to why a decision is made.

That’s why validation is essential. A specialist can validate the training success by seeing if the results from an analysis fit the expectations. Validation data sets enable you to compare how well the trained artificial neural network can recognize the specified image areas.
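One common way to put a number on how well a predicted segmentation matches labeled validation data is intersection over union (IoU). The article does not say which metric the software reports, so treat the choice of IoU and the toy masks below as assumptions for illustration.

```python
def iou(pred, truth):
    """Intersection over union of two binary masks of equal size."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += p & t
            union += p | t
    # Two empty masks agree perfectly, so define their IoU as 1.0.
    return intersection / union if union else 1.0


# Ground truth: a vertical grain boundary in the middle column.
truth = [
    [0, 1, 0],
    [0, 1, 0],
    [0, 1, 0],
]
# Hypothetical network output: one boundary pixel missed, one false positive.
pred = [
    [0, 1, 0],
    [0, 1, 1],
    [0, 0, 0],
]

score = iou(pred, truth)
print(score)
```

Here two pixels overlap and four pixels are covered by at least one of the masks, giving an IoU of 0.5. A specialist would still need to decide what score is acceptable for the application.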

You can also use the network to generate a probability map, which can be displayed during training as an overlay on labeled validation images. Note that the validation data are not part of the data set that was used to train the network. To give a realistic assessment of the training status, quality criteria such as loss can be evaluated on both the training images and the validation images, and their similarity can be output numerically and as a graph.
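The comparison of loss on training and validation images can be sketched as follows. The article does not name the loss function, so binary cross-entropy is assumed here, and the probability maps are invented values standing in for network output.

```python
import math


def binary_cross_entropy(prob_map, labels, eps=1e-7):
    """Mean binary cross-entropy between a probability map and 0/1 labels."""
    total = 0.0
    n = 0
    for prob_row, label_row in zip(prob_map, labels):
        for p, y in zip(prob_row, label_row):
            p = min(max(p, eps), 1 - eps)  # avoid log(0)
            total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
            n += 1
    return total / n


labels = [[1, 0], [0, 1]]

# Invented foreground probabilities: confident on a training image,
# less confident on a validation image the network has not seen.
train_probs = [[0.9, 0.1], [0.1, 0.9]]
val_probs = [[0.6, 0.4], [0.4, 0.6]]

train_loss = binary_cross_entropy(train_probs, labels)
val_loss = binary_cross_entropy(val_probs, labels)
gap = val_loss - train_loss

print(f"train={train_loss:.3f} val={val_loss:.3f} gap={gap:.3f}")
```

A validation loss that stays well above the training loss, or that rises while the training loss keeps falling, suggests the network is memorizing the training images rather than learning features that generalize.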

After training with validation is complete, a new data set, the test data set, is used to check whether the algorithm still works on representative new data. A specialist, or ideally multiple experts, must verify this final test to reduce the risk of misinterpretations of AI results due to human bias.
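Keeping training, validation, and test data separate can be sketched with a simple random split. The 70/15/15 proportions, the file names, and the helper `split_dataset` are assumptions for illustration, not recommendations from the software documentation.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains is held out for testing
    return train, val, test


# Hypothetical file names for 20 labeled micrographs.
image_names = [f"section_{i:02d}.tif" for i in range(20)]
train, val, test = split_dataset(image_names)

print(len(train), len(val), len(test))
```

Because each image lands in exactly one subset, the test images stay unseen until the final check. A random split alone does not guarantee that each subset is representative, which is one reason the specialist review described above remains necessary.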

4. Apply the trained neural network to comparable images.

Now the trained neural network is available as a segmentation method. You can apply it to comparable images, such as images with similar light and exposure conditions. Applying a properly trained neural network is simple in intuitive industrial imaging software, such as PRECiV software. With a single click, the network automatically segments the image and delivers reproducible results.

Learn More about Deep Learning in Metallography and Materialography

To learn more about AI-based image analysis, including its benefits, how it works, and how to apply it in your work, check out our TruAI resource center. Our experts are happy to help you work AI into your specific material analysis application.
